From CPU to GPU in 80 Days

To learn more about multi-GPU programming in Palabos, register for the Summer School 2022.

The Palabos GPU port: from CPU to GPU in 80 days

In 2021, Palabos received a GPU backend that allows existing Palabos programs to run in a multi-GPU environment with a single-line change. The specifics of the GPU port are summarized here.

| Repository of the GPU port: | https://gitlab.com/unigehpfs/palabos |
| Implementation paradigm: | C++ parallel algorithms |
| Type of GPUs: | So far tested on NVIDIA GPUs only (but the formalism is vendor independent) |
| Further information: | About C++ parallel algorithms |
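To illustrate what the "C++ parallel algorithms" paradigm refers to, here is a minimal sketch of a standard-library algorithm that takes an execution policy. The function `relax_toward_equilibrium` and its toy relaxation rule are hypothetical, chosen for illustration only; this is not code from Palabos and does not show the Palabos API.

```cpp
#include <algorithm>
#include <cassert>
#include <execution>
#include <utility>
#include <vector>

// Toy relaxation step f <- f + omega * (feq - f), applied element-wise.
// Hypothetical helper for illustration; not part of the Palabos API.
template <class ExecutionPolicy>
void relax_toward_equilibrium(ExecutionPolicy&& policy,
                              std::vector<double>& f,
                              const std::vector<double>& feq,
                              double omega)
{
    assert(f.size() == feq.size());
    // The policy argument is the only difference between a sequential
    // call and a parallel one; the algorithm body stays the same.
    std::transform(std::forward<ExecutionPolicy>(policy),
                   f.begin(), f.end(), feq.begin(), f.begin(),
                   [omega](double fi, double feqi) {
                       return fi + omega * (feqi - fi);
                   });
}
```

Calling the function with `std::execution::seq` runs it sequentially, while `std::execution::par_unseq` permits parallel and vectorized execution. Where the parallel version actually runs depends on the toolchain: some compilers can offload such calls to a GPU, and others run them on CPU threads and may need an extra threading library at link time.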

Palabos performance on GPU

We report here the performance obtained with the 3D cavity flow benchmark on a single A100 (40 GB) GPU and on a node with four A100 (40 GB) GPUs connected by NVLink.

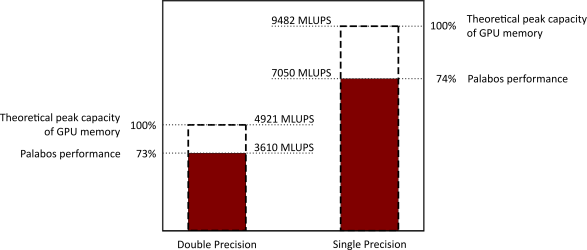

Single-GPU performance (A100 40 GB)

On a single A100 GPU, Palabos achieves 3610 MLUPS (million lattice updates per second) with double-precision floats and 7050 MLUPS with single-precision floats.
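For readers unfamiliar with the unit, the following sketch shows how an MLUPS figure is commonly computed from a timed run: lattice nodes times iterations, divided by wall-clock seconds and by one million. The function names and parameters are assumptions made for illustration; the benchmark's actual grid size and timing code are not given here.

```cpp
#include <cassert>
#include <cstdint>

// Million lattice updates per second for a run on an nx * ny * nz grid.
// Hypothetical helper; the grid and timing values are supplied by the caller.
double mlups(std::int64_t nx, std::int64_t ny, std::int64_t nz,
             std::int64_t iterations, double seconds)
{
    assert(seconds > 0.0);
    const double updates = static_cast<double>(nx) * ny * nz * iterations;
    return updates / seconds / 1.0e6;
}

// Ratio between two throughput figures, e.g. single vs. double precision.
double throughput_ratio(double numerator, double denominator)
{
    assert(denominator > 0.0);
    return numerator / denominator;
}
```

With the figures reported above, `throughput_ratio(7050.0, 3610.0)` is roughly 1.95, so single precision delivers close to twice the double-precision throughput on this benchmark.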

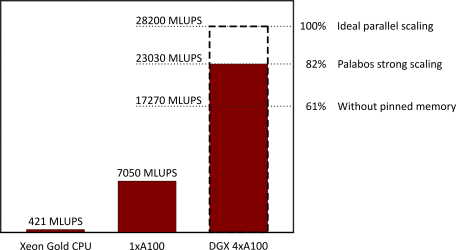

Performance on 4 A100 40 GB GPUs

The multi-GPU performance is tested in a strong scaling regime (overall problem size remains constant): the code runs more than 3 times faster than on a single GPU. Compared to a 48-core Xeon Gold CPU (executed with the original CPU-parallel version of Palabos), the 4-GPU code runs 55 times faster.
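The strong-scaling statement above can be expressed as a parallel efficiency, that is, speedup divided by the number of devices. The sketch below uses hypothetical helper names and takes timings as inputs; it does not reproduce the benchmark's measured run times, which are not listed here.

```cpp
#include <cassert>

// Strong-scaling speedup: same problem size, time on one device
// divided by time on several devices.
double strong_scaling_speedup(double seconds_single, double seconds_multi)
{
    assert(seconds_multi > 0.0);
    return seconds_single / seconds_multi;
}

// Parallel efficiency: fraction of the ideal (linear) speedup achieved.
double parallel_efficiency(double speedup, int devices)
{
    assert(devices > 0);
    return speedup / devices;
}
```

A speedup of more than 3 on 4 GPUs corresponds to `parallel_efficiency(3.0, 4)`, that is, an efficiency above 0.75 in the strong-scaling regime.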

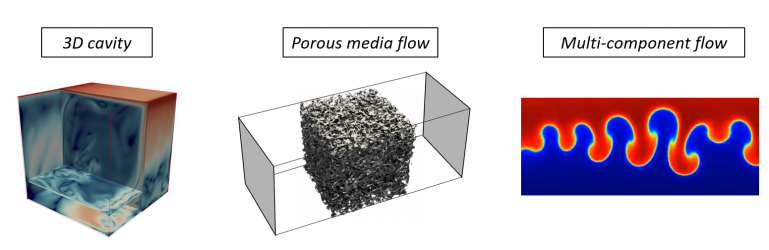

Test cases executed on GPU so far